ChatGPT, Bard and Language Models at Owlin

February 14, 2023 – Written by Floris Hermsen

It’s been impossible to miss: AI has gone mainstream! For a few weeks now, OpenAI’s ChatGPT has been all the rage on Twitter, LinkedIn, and the news. And with Microsoft reportedly set to acquire a 49% stake in OpenAI and Google announcing its own AI-powered chatbot, Bard, the AI wars in Big Tech have officially begun! The seemingly human-like responses of these chatbots have captured imaginations all around the world, disrupting journalism, content creation, education, web search, and more. The language models that power them, though, have already been around for quite a while. And at Owlin, you can find them all over our machine learning stack! We use them for translation, finding entities in text, measuring document similarity at scale, even writing event summaries from scratch, and much more. But let’s first unpack why we even need all these capabilities.

At Owlin, we analyze global news and other alternative data for large players in the financial sector, with a focus on third-party risk management, strategic insights, KYC, and ESG. Our clients need to know when one of their suppliers, clients, investments, or merchants is at risk of going bankrupt, involved in litigation, about to be acquired, or showing any of the many other important signals that we track. To that end, we analyze hundreds of thousands of news articles from all over the world, in many different languages, every single day. This is far too much for human analysts to process, so we use natural language processing (NLP) and various other types of machine learning to extract all relevant data points from these news articles. And large language models (LLMs), such as those powering ChatGPT, play a vital role in our NLP pipeline.

In essence, language models are huge neural networks that have been trained on massive amounts of text from the internet, books, publications, and other sources. They can be trained without any hand-labeled data (often called self-supervised learning) by randomly removing parts of the input text and training the model to fill in the blanks. This results in models that capture both syntax and semantics, which are crucial for understanding natural language. And they keep getting bigger and better!
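To make the fill-in-the-blanks idea concrete, here is a minimal sketch of how such training examples could be created from raw text. The function name `mask_tokens`, the `[MASK]` placeholder, and the masking rates are illustrative assumptions, not a description of how any particular model (or Owlin's stack) does it.

```python
import random

MASK = "[MASK]"  # placeholder token; the exact symbol is an assumption


def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Randomly hide tokens and remember what was hidden.

    Returns (masked_tokens, targets), where targets maps the position of
    each hidden token to the original token the model should predict.
    """
    rng = random.Random(seed)
    masked, targets = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[position] = token
        else:
            masked.append(token)
    return masked, targets


sentence = "the supplier filed for bankruptcy protection last week".split()
masked, targets = mask_tokens(sentence, mask_prob=0.3, seed=0)
print(" ".join(masked))
print(targets)
```

The text itself supplies the labels: every hidden word becomes a prediction target, so no human annotation is needed.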

What makes language models so revolutionary compared to older methods is their ability to combine very deep neural network architectures with incredible amounts of training data and a relatively recent machine learning innovation: the attention mechanism. Through attention, the key ingredient of so-called transformer architectures, language models learn which words in a text are relevant to one another, enabling them to learn complex patterns and relationships. This is how they outperform earlier neural network architectures such as LSTMs, as well as the more rule-based approaches that have been around for decades.
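For readers who like to see the mechanics, below is a small pure-Python sketch of scaled dot-product attention, the core operation in transformer architectures. The toy vectors are made up for illustration, and a real model would additionally apply learned projections to produce the queries, keys, and values, which this sketch leaves out.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    For each query, score every key by dot product, scale by sqrt(dim),
    normalise with softmax, and return the weighted average of the values.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "word" vectors; in self-attention they serve as queries,
# keys, and values at the same time.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(words, words, words)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how strongly one word attends to every other word, which is the sense in which the model learns which words belong together.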

At Owlin, we use a combination of the best available open-source language models, as well as a few that we fine-tune ourselves. Language models are great when you want best-in-class performance on common tasks that do not change much over time, such as translation, entity recognition, and generating document identity fingerprints. And if you want to generate high-quality, human-readable text, generative language models (like those that power ChatGPT) are the only game in town.

Rule-based algorithms still have their place in certain NLP applications, however, because the increased performance of language models comes at a cost. Language models are expensive to train, contain hidden biases, and are hard to adapt incrementally. It is also often hard to explain why a language model produces a certain outcome (the black box problem). Because we operate in a regulated industry, explainability and adaptability are key requirements for some of our core capabilities, such as finding and interpreting risk signals in the worldwide news. But we would still like to leverage the power of language models to improve the quality of these systems. How does one go about balancing these priorities?

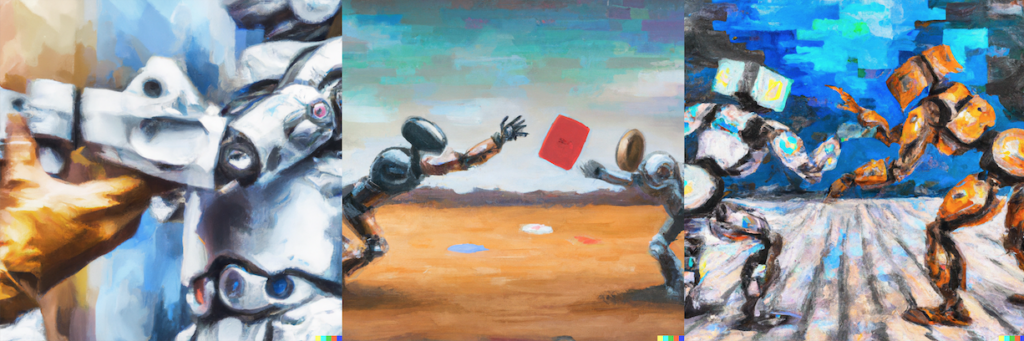

This is where we at Owlin get really creative by coming up with hybrid systems. One example is using a language model to suggest new risk triggers to look for in the news. Another is using a language model to approve or reject the output of a rule-based system, which makes the composite system much more maintainable and explainable. This is where we as data scientists and machine learning engineers get to create unique, best-in-class systems to power our product.
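As a rough illustration of the approve-or-reject pattern, here is a minimal sketch. Everything in it is hypothetical: the rules, the `Signal` class, the `verify` function, and especially `toy_scorer`, which merely stands in for a real language model call. It is not Owlin's actual implementation.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Signal:
    rule: str      # name of the rule that fired
    sentence: str  # the text it fired on


# Hypothetical, hand-written rules: transparent and easy to audit.
RULES = {
    "bankruptcy": re.compile(r"\b(bankrupt(cy)?|insolven(t|cy))\b", re.I),
    "litigation": re.compile(r"\b(lawsuit|sued|litigation)\b", re.I),
}


def rule_based_signals(sentences: List[str]) -> List[Signal]:
    """Stage 1: cheap, explainable candidate detection."""
    return [
        Signal(name, sentence)
        for sentence in sentences
        for name, pattern in RULES.items()
        if pattern.search(sentence)
    ]


def verify(signals: List[Signal],
           scorer: Callable[[Signal], float],
           threshold: float = 0.5) -> List[Signal]:
    """Stage 2: keep only the candidates the scorer approves."""
    return [s for s in signals if scorer(s) >= threshold]


def toy_scorer(signal: Signal) -> float:
    """Stand-in for a language model; it only penalises obvious denials."""
    denied = re.search(r"\b(not|denies|no)\b", signal.sentence, re.I)
    return 0.1 if denied else 0.9


sentences = [
    "Acme files for bankruptcy.",
    "Globex denies it is insolvent.",
    "Initech opened a new office.",
]
candidates = rule_based_signals(sentences)
accepted = verify(candidates, toy_scorer)
print([(s.rule, s.sentence) for s in accepted])
```

Because every accepted signal still traces back to a named rule, the output remains explainable, while the model only acts as a filter on top.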

So with the AI space developing this rapidly, what is next? One possible next step is the integration of generative language models with other intelligent systems, for instance by teaching them how to reason over facts and relationships from knowledge graphs. This would make the models less dependent on their training data as their only source of knowledge, and make it easier to put them to use in settings where up-to-date information is key. Rest assured that both OpenAI and Google are working on this as we speak. And you can count on us at Owlin to follow all the latest developments and keep our own innovations going strong to ensure the quality of the insights we serve!